In a recent post, I wrote about the pitfalls of taking your data at face value. Last week, I blogged about how you can’t know the true impact of critical organizational attributes without diving more deeply into the data (read: doing more than exploring it visually or looking at up/down trends).

My business partner sent me an excellent article this morning by Stacy Harris that asks the question: “Are You Considering Firing Your Employee Engagement Partner?” I’ve been thinking a lot lately about data and how to make sense of it, and I recommend this article to anyone else on a data journey. I think Stacy’s research applies to any type of data collection method. Here is what I took away from the article:

It’s not enough to have a survey; you need a strategy. A stand-alone survey gives you just that: one view. A survey designed to support a business strategy, on the other hand, provides data that can be aggregated with other data sets for a more accurate and complete picture.
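To make the aggregation point concrete, here’s a minimal Python sketch that combines survey results with a second data set. Everything in it is hypothetical: the department names, the scores, the turnover rates and the `combine` helper are made up for illustration and don’t come from Stacy’s article.

```python
# Hypothetical data: average engagement score (1-5) per department from a survey.
survey_scores = {"Sales": 4.1, "Support": 3.2, "Engineering": 3.8}

# Hypothetical data from a separate HR system: annual turnover rate per department.
turnover_rates = {"Sales": 0.08, "Support": 0.21, "Finance": 0.10}


def combine(scores, turnover):
    """Join the two data sets on department name.

    Departments missing from either source are kept, with None for the
    missing value, so gaps in coverage stay visible instead of vanishing.
    """
    combined = {}
    for dept in sorted(set(scores) | set(turnover)):
        combined[dept] = {
            "engagement": scores.get(dept),
            "turnover": turnover.get(dept),
        }
    return combined


# Print one combined row per department.
for dept, row in combine(survey_scores, turnover_rates).items():
    print(dept, row)
```

The design choice worth noting is that departments appearing in only one source are kept rather than dropped. A survey that never reached a department is itself something you want to see.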

If you ask generic questions, you get smiley or frown-y answers but no insight. Whether you have 12 questions or 40, if they don’t speak to your culture, your workforce or your targeted customers, I’m hard-pressed to see how you will get any “ahas” that are worth the time and expense of a survey.

What specifically do you want from a survey or any “listening post” that you design? “Because we’ve always done one” or “Because everyone else does it” are not specific objectives. What business issue do you hope to resolve? What’s keeping you or your boss up at night?

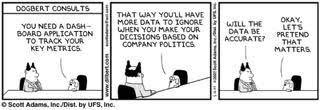

How are you analyzing the data? Scores alone may not tell you what you need to know. Trends that aren’t tied to some rationale are just up or down points on a chart. Desktop tools exist to assist. Help is available (call me).
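As one small example of going beyond the score itself, here’s a minimal Python sketch that checks whether survey scores move together with a business outcome. The numbers, the choice of retention as the outcome and the `pearson` helper are all assumptions for illustration; your own analysis would use whatever outcome ties back to your business issue.

```python
from math import sqrt

# Hypothetical data, one entry per business unit.
engagement = [3.1, 3.4, 3.9, 4.2, 4.5]      # average survey score (1-5)
retention = [0.78, 0.80, 0.86, 0.90, 0.93]  # share of staff retained over a year


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of the same length, at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations, and sums of squared deviations.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / sqrt(var_x * var_y)


r = pearson(engagement, retention)
print(f"correlation between engagement and retention: {r:.2f}")
```

A value near 1 or -1 says the two measures move together across units; a value near 0 says the score isn’t telling you much about that outcome. Either way it’s a starting point for asking “why,” not proof that one causes the other, and five data points are far too few to rely on.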

If you’re hung up on benchmarks, you may never get to the “why” of your own project. I know that some organizations swear by a benchmark study, and who can argue, as long as the benchmarks map precisely to your own situation and the study is not your only measure? But like generic questions, a benchmark exercise without the appropriate construct can leave you with no specific roadmap for your success.

Collecting data is a critical component of every function these days. It's a project like any other, with objectives, outcomes and measures. Is your data giving you what you need?